What Is Cloud Observability and Why It Matters

Cloud observability extends beyond traditional monitoring by providing deep insights into system behavior through comprehensive data collection and analysis. While monitoring answers whether systems function correctly, observability explains why they behave in specific ways, enabling engineers to debug complex distributed systems effectively.

The shift from monolithic applications to microservices architectures has made observability essential. Modern cloud applications consist of dozens or hundreds of interconnected services, each potentially failing in unique ways. Traditional monitoring approaches that check predefined metrics fail to capture the emergent behaviors in these distributed systems.

Observability empowers teams to ask arbitrary questions about system behavior without pre-instrumenting for specific failure modes. This capability proves critical when investigating novel issues that monitoring systems never anticipated. Engineers can explore data freely to understand root causes rather than relying on predetermined dashboards.

Business impact drives observability investment beyond operational concerns. System performance directly affects user experience, revenue, and customer satisfaction. Observable systems enable organizations to correlate technical metrics with business outcomes, quantifying the cost of performance degradations.

The Three Pillars: Metrics, Logs, and Traces

Metrics provide quantitative measurements of system behavior over time. These numerical values track resource utilization, request rates, error rates, and business KPIs. Metrics excel at identifying that problems exist and measuring their magnitude, though they lack the context to explain why issues occur.

Time series metrics enable trend analysis and capacity planning through historical data retention. By examining metric patterns over weeks or months, teams identify seasonal variations, growth trends, and emerging bottlenecks. Alerting systems trigger notifications when metrics exceed predefined thresholds.

Logs capture discrete events with detailed contextual information about system activities. Each log entry records what happened, when it occurred, and relevant metadata. Logs provide the narrative detail necessary to reconstruct sequences of events during incident investigations.

Structured logging transforms logs into queryable data rather than unstructured text. By emitting logs in JSON or other structured formats with consistent field names, teams can search, filter, and aggregate log data efficiently. This structure converts logs from diagnostic afterthoughts into first-class observability data.
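
As a rough illustration of structured logging, the sketch below uses Python's standard `logging` module to emit one JSON object per log line. The field names (`timestamp`, `severity`, `logger`, `message`) and the `fields` convention for extra attributes are assumptions made for this example, not an established standard.

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc).isoformat(),
            "severity": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Assumed convention: callers pass extra={"fields": {...}} and those
        # keys are merged into the JSON object unchanged.
        entry.update(getattr(record, "fields", {}))
        return json.dumps(entry, sort_keys=True)


def build_logger(name: str) -> logging.Logger:
    """Return a logger that writes JSON lines to stderr."""
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = build_logger("checkout")
    log.info("payment authorized", extra={"fields": {"order_id": "o-123", "latency_ms": 42}})
```

Because every entry shares the same keys, a log backend can filter on `severity` or `order_id` directly instead of pattern-matching free text.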

Traces follow individual requests as they traverse distributed systems, creating a complete picture of transaction flow. Each trace consists of spans representing operations within services. This distributed context enables engineers to identify exactly where latency accumulates or errors originate across service boundaries.

The three pillars complement each other rather than competing. Metrics identify anomalies, logs provide contextual details, and traces reveal distributed interactions. Effective observability strategies integrate all three data types to create comprehensive visibility into system behavior.

Building Your Observability Stack Architecture

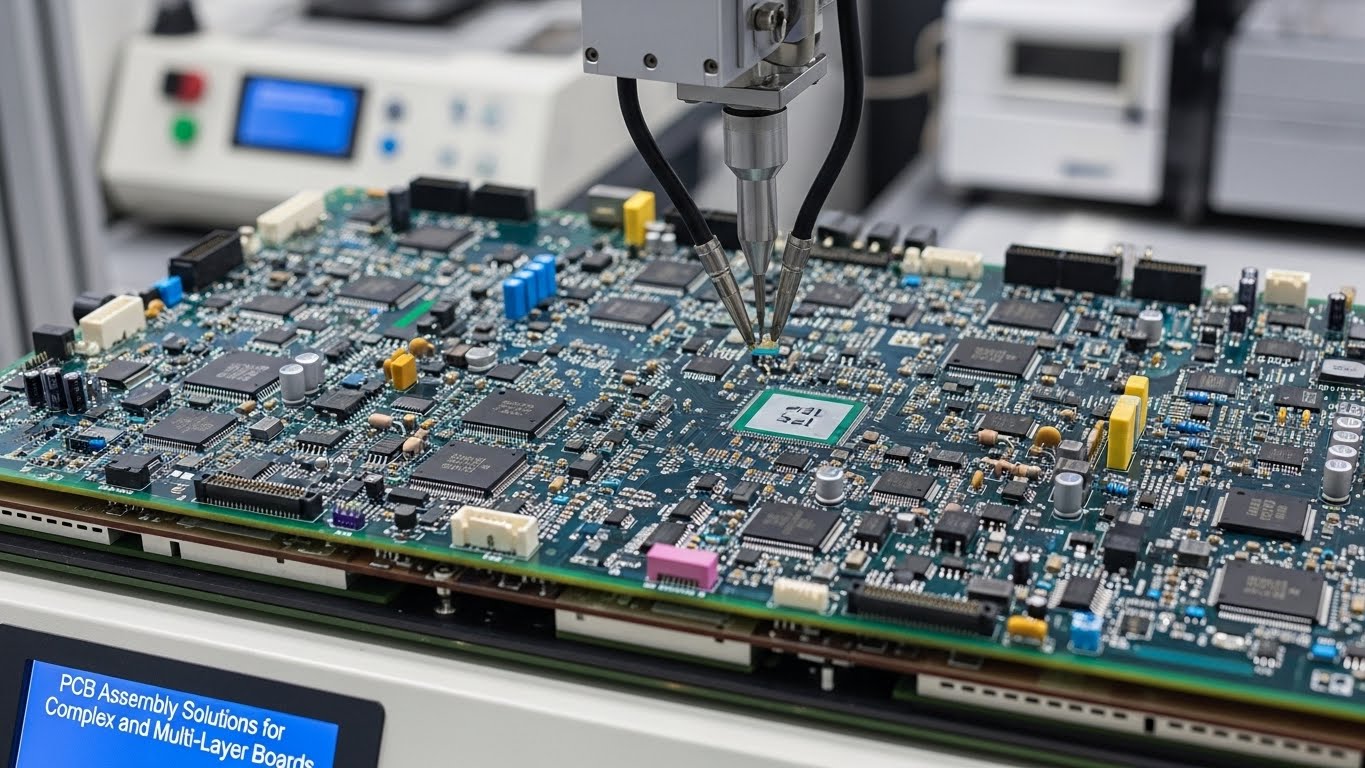

Collection agents gather observability data from applications and infrastructure. These agents instrument code, capture system metrics, aggregate logs, and sample traces. Agent deployment strategies balance coverage, performance overhead, and operational complexity across the entire infrastructure.

Centralized data stores consolidate observability data for analysis and querying. Time series databases handle metrics, log aggregation systems manage log data, and specialized trace stores organize distributed tracing information. Selecting appropriate storage systems for each data type optimizes both performance and cost.

Modern observability platforms increasingly unify metrics, logs, and traces in single systems. This consolidation simplifies correlation across data types: engineers can pivot from a metric spike to relevant logs and traces without switching tools. Unified platforms reduce cognitive load during high-pressure incident response.

Data pipelines process and enrich observability data before storage. These pipelines perform tasks like sampling traces to reduce volume, enriching events with metadata, and routing data to multiple destinations. Well-designed pipelines ensure data quality while managing costs.

Query engines provide the interface for exploring observability data. Advanced query capabilities enable complex aggregations, joins across data types, and ad-hoc analysis. The flexibility to ask new questions separates true observability from rigid monitoring dashboards.

Visualization layers transform raw data into actionable insights through dashboards and reports. Effective visualizations highlight anomalies, show trends, and provide context for decision-making. Well-designed dashboards serve both operational troubleshooting and strategic planning needs.

Metrics Collection and Aggregation Strategies

Pull-based collection models have monitoring systems scrape metrics from application endpoints periodically. This approach simplifies application code since services only expose metrics without managing delivery. Scraping intervals typically range from 10 to 60 seconds, balancing freshness with collection overhead.

Push-based collection sends metrics from applications to collection endpoints. This model works well for short-lived processes like batch jobs that may not exist when pull collectors attempt scraping. Push systems require more sophisticated buffering and retry logic within applications.

Service discovery mechanisms automatically identify monitoring targets as infrastructure scales dynamically. Integration with orchestration platforms like Kubernetes enables monitoring systems to discover new services without manual configuration. This automation proves essential in cloud-native environments where services appear and disappear frequently.

Metric cardinality management prevents dimensional explosion that overwhelms storage systems. When label combinations multiply into millions of unique time series, storage costs skyrocket and query performance degrades. Implementing cardinality limits and monitoring growth patterns maintains system health.
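
The multiplication behind cardinality growth is easy to sketch. The helper names and the label counts in the example are hypothetical; the point is only that the worst case for one metric is the product of the distinct values of each label.

```python
from math import prod


def worst_case_series(label_values: dict[str, int]) -> int:
    """Upper bound on time series for one metric: the product of the number
    of distinct values each label can take."""
    return prod(label_values.values())


def observed_series(samples: list[dict[str, str]]) -> int:
    """Count the distinct label sets actually seen in a batch of samples."""
    return len({tuple(sorted(sample.items())) for sample in samples})


if __name__ == "__main__":
    labels = {"method": 5, "status": 10, "endpoint": 200}
    print(worst_case_series(labels))
    # Adding a single unbounded label such as a user ID multiplies everything.
    labels["user_id"] = 100_000
    print(worst_case_series(labels))
```

Comparing `observed_series` against a budget per metric is one simple way to notice a label that has started to take unbounded values.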

Pre-aggregation reduces data volume by computing rollups before storage. For high-frequency metrics, storing every sample becomes prohibitively expensive. Aggregating values into larger time buckets reduces storage requirements while maintaining analytical value for long-term trends.
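
A rollup of this kind can be sketched in a few lines. The function below assumes samples arrive as `(unix_timestamp, value)` pairs with integer timestamps and keeps count, min, max, and average per bucket; the choice of those four aggregates is illustrative.

```python
from collections import defaultdict
from statistics import mean


def rollup(samples: list[tuple[int, float]], bucket_seconds: int) -> dict[int, dict]:
    """Aggregate (unix_ts, value) samples into fixed-width time buckets.

    Returns {bucket_start: {"count", "min", "max", "avg"}}.
    """
    buckets: dict[int, list[float]] = defaultdict(list)
    for ts, value in samples:
        # Align each timestamp to the start of its bucket.
        buckets[ts - ts % bucket_seconds].append(value)
    return {
        start: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "avg": mean(values),
        }
        for start, values in sorted(buckets.items())
    }


if __name__ == "__main__":
    raw = [(0, 1.0), (10, 3.0), (59, 2.0), (60, 10.0)]
    for start, summary in rollup(raw, 60).items():
        print(start, summary)
```

Keeping min and max alongside the average preserves spikes that an average alone would smooth away, at the cost of a few extra values per bucket.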

Log Management in Distributed Cloud Systems

Centralized log aggregation collects logs from all services into searchable repositories. Distributed systems generate logs across numerous hosts, making centralized collection essential for correlation and analysis. Log aggregation pipelines normalize formats and enrich entries with contextual metadata.

Structured logging standards improve searchability and analysis capabilities. Establishing consistent field names, timestamp formats, and severity levels across all services enables powerful querying. Teams can filter logs by specific fields rather than resorting to regular expression searches through unstructured text.

Log sampling reduces volume while preserving analytical value. Storing every debug log from high-traffic services is expensive and adds little investigative value. A common strategy retains all error logs, samples warning logs lightly, and samples informational logs heavily.
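
One way to express severity-based sampling is a rate table consulted per log entry. The rates below are hypothetical placeholders, not recommendations; appropriate values depend on traffic and budget.

```python
import random

# Hypothetical keep-rates per severity: 1.0 keeps everything.
SAMPLE_RATES = {"ERROR": 1.0, "WARNING": 0.25, "INFO": 0.01, "DEBUG": 0.001}


def should_keep(severity: str, rng: random.Random) -> bool:
    """Decide whether to keep one log entry based on its severity."""
    # Unknown severities are kept, so nothing is dropped by accident.
    rate = SAMPLE_RATES.get(severity, 1.0)
    return rng.random() < rate


if __name__ == "__main__":
    rng = random.Random(0)
    kept = sum(should_keep("INFO", rng) for _ in range(10_000))
    print(f"kept {kept} of 10000 INFO entries")
```

Random sampling like this drops entries independently, so related lines from one request may be split; sampling on a hash of a request identifier instead would keep or drop a request's lines together.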

Correlation IDs link log entries across service boundaries. When requests traverse multiple services, injecting a unique identifier enables reconstruction of the complete transaction flow. These identifiers create a lightweight alternative to full distributed tracing for many use cases.
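
The sketch below shows one possible way to carry a correlation ID through a Python service using `contextvars` and a `logging.Filter`. The header name `X-Correlation-ID` is an assumption (there is no single standard name), and `begin_request` and `outbound_headers` are hypothetical helpers.

```python
import contextvars
import logging
import uuid

# Assumed header name; teams pick their own, and lookups here are case-sensitive.
HEADER = "X-Correlation-ID"

correlation_id: contextvars.ContextVar[str] = contextvars.ContextVar(
    "correlation_id", default="-"
)


class CorrelationFilter(logging.Filter):
    """Attach the current correlation ID to every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.correlation_id = correlation_id.get()
        return True


def begin_request(headers: dict[str, str]) -> str:
    """Reuse the caller's ID if present, otherwise mint a new one."""
    cid = headers.get(HEADER) or uuid.uuid4().hex
    correlation_id.set(cid)
    return cid


def outbound_headers() -> dict[str, str]:
    """Headers to add to any downstream call made while handling this request."""
    return {HEADER: correlation_id.get()}


if __name__ == "__main__":
    handler = logging.StreamHandler()
    handler.addFilter(CorrelationFilter())
    handler.setFormatter(logging.Formatter("%(correlation_id)s %(levelname)s %(message)s"))
    log = logging.getLogger("orders")
    log.addHandler(handler)
    log.setLevel(logging.INFO)

    begin_request({})
    log.info("order received")
    log.info("calling payments with %s", outbound_headers())
```

Searching the aggregated logs for one ID then returns every entry written on behalf of that request, across all services that forwarded the header.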

Log retention policies balance investigative needs against storage costs. Recent logs require fast access for active investigations, while older logs serve compliance and historical analysis. Tiered storage moves aging logs to cheaper storage while maintaining searchability.

Distributed Tracing for Microservices

Trace instrumentation captures timing and metadata for operations as requests flow through systems. Automatic instrumentation through agents or frameworks reduces development burden, while manual instrumentation provides granular control over what gets captured. Hybrid approaches combine both strategies.

Span relationships create the parent-child hierarchies that represent request flow. A parent span in one service spawns child spans for downstream service calls. This hierarchical structure enables visualization of how requests propagate and where time accumulates.
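
To show how the parent-child structure reveals where time accumulates, the following sketch computes each span's self time, meaning its duration minus the time spent in its direct children. The `Span` fields are a simplified, hypothetical model, and the calculation assumes sibling spans do not overlap and span names are unique.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]  # None marks the root span of the trace
    name: str
    start_ms: int
    end_ms: int

    @property
    def duration_ms(self) -> int:
        return self.end_ms - self.start_ms


def self_times(spans: list[Span]) -> dict[str, int]:
    """Map span name to time spent in that span excluding direct children.

    Assumes children of the same parent run sequentially (no overlap).
    """
    child_total: dict[str, int] = {}
    for span in spans:
        if span.parent_id is not None:
            child_total[span.parent_id] = child_total.get(span.parent_id, 0) + span.duration_ms
    return {s.name: s.duration_ms - child_total.get(s.span_id, 0) for s in spans}


if __name__ == "__main__":
    trace = [
        Span("a", None, "gateway", 0, 120),
        Span("b", "a", "orders", 10, 110),
        Span("c", "b", "db.query", 20, 100),
    ]
    for name, ms in self_times(trace).items():
        print(f"{name}: {ms} ms")
```

In this made-up trace most of the request's latency sits in the innermost span, which is the kind of conclusion a trace waterfall view makes visible at a glance.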

Context propagation passes trace identifiers across service boundaries. When Service A calls Service B, it includes trace context in request headers or metadata. Service B extracts this context and creates spans linked to the original trace, maintaining distributed visibility.
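
As one concrete form of context propagation, the sketch below builds and parses a `traceparent` header in the W3C Trace Context layout (`version-traceid-parentid-flags`). The article does not say which header format is in use, so this choice is an assumption, and the `inject` and `extract` helpers are hypothetical; tracing SDKs normally do this work.

```python
import re
import secrets
from typing import Optional

# version 00, 32 hex chars of trace ID, 16 hex chars of parent span ID, 2 hex chars of flags
TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_trace_id() -> str:
    return secrets.token_hex(16)


def new_span_id() -> str:
    return secrets.token_hex(8)


def inject(trace_id: str, span_id: str, sampled: bool = True) -> dict[str, str]:
    """Service A: build the header to attach to an outgoing request."""
    flags = "01" if sampled else "00"
    return {"traceparent": f"00-{trace_id}-{span_id}-{flags}"}


def extract(headers: dict[str, str]) -> Optional[tuple[str, str, bool]]:
    """Service B: return (trace_id, parent_span_id, sampled), or None if the
    header is missing or malformed."""
    match = TRACEPARENT.match(headers.get("traceparent", ""))
    if match is None:
        return None
    trace_id, parent_id, flags = match.groups()
    # All-zero identifiers are invalid in the W3C format.
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None
    return trace_id, parent_id, bool(int(flags, 16) & 1)


if __name__ == "__main__":
    outgoing = inject(new_trace_id(), new_span_id())
    print(outgoing)
    print(extract(outgoing))
```

Service B would create its own span with a fresh span ID, record the extracted parent ID as that span's parent, and reuse the trace ID unchanged so both spans land in the same trace.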

Sampling strategies control what percentage of traces get recorded. Head-based sampling makes decisions at trace initiation, while tail-based sampling examines complete traces before deciding whether to retain them. Intelligent sampling preserves interesting traces like errors or slow requests while discarding routine successful transactions.
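
The two decision points can be contrasted in a short sketch. Both functions are hypothetical simplifications: the head-based one assumes trace IDs are uniformly random hex strings, and the tail-based one assumes spans are dictionaries with `start_ms`, `end_ms`, and an optional `error` flag, with a made-up 500 ms slowness threshold.

```python
def head_sample(trace_id: str, rate: float) -> bool:
    """Head-based: decide from the trace ID alone, before any work happens.

    The decision is deterministic, so every service that sees the same trace
    ID reaches the same answer without coordination.
    """
    # Interpret the last 8 hex digits as a number in [0, 2**32).
    return int(trace_id[-8:], 16) < rate * 2**32


def tail_sample(spans: list[dict], slow_ms: int = 500) -> bool:
    """Tail-based: decide after the trace is complete.

    Keeps traces that contain an error or whose total duration is slow.
    """
    if not spans:
        return False
    has_error = any(span.get("error") for span in spans)
    duration = max(s["end_ms"] for s in spans) - min(s["start_ms"] for s in spans)
    return has_error or duration >= slow_ms


if __name__ == "__main__":
    print(head_sample("4bf92f3577b34da6a3ce929d0e0e4736", 0.10))
    print(tail_sample([{"start_ms": 0, "end_ms": 40}]))
    print(tail_sample([{"start_ms": 0, "end_ms": 40, "error": True}]))
```

The trade-off is that head-based sampling is cheap but blind to outcomes, while tail-based sampling must buffer every span of a trace until it finishes before it can decide.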

Trace analysis identifies performance bottlenecks and error sources across distributed systems. By examining trace data, engineers pinpoint which service calls introduce latency or generate errors. This capability dramatically reduces mean time to resolution during incidents.

Choosing the Right Observability Tools

Open-source solutions provide flexibility and eliminate vendor lock-in at the cost of operational responsibility. Teams must handle deployment, scaling, and maintenance themselves. Popular open-source options include Prometheus for metrics, Elasticsearch for logs, and Jaeger for traces.

Commercial observability platforms offer managed services that reduce operational burden. Vendors handle infrastructure management, updates, and scaling, allowing teams to focus on using observability data rather than operating systems. This convenience comes with subscription costs and potential vendor dependency.

Cloud-native integrations simplify deployment in public cloud environments. Observability tools that integrate seamlessly with AWS CloudWatch, Azure Monitor, or Google Cloud Operations reduce implementation effort. Native integrations often provide automatic discovery and configuration.

Feature requirements vary based on organizational maturity and use cases. Small teams might prioritize simplicity and low maintenance, while large enterprises need advanced analytics, security features, and compliance capabilities. Evaluating current and future needs prevents costly migrations later.

Total cost of ownership extends beyond licensing fees. Consider data ingestion costs, storage expenses, query volume charges, and required operational expertise. Some vendors charge based on data volume, while others use host-based or user-based pricing models.

Cost Optimization for Cloud Observability

Data volume management represents the largest cost lever in observability systems. Reducing ingested data through sampling, filtering, and aggregation directly decreases storage and processing costs. Strategic decisions about what data to collect prevent unnecessary expenses.

Retention policies should align with actual usage patterns. Teams often retain data far longer than necessary, incurring storage costs without corresponding value. Analyzing query patterns reveals which data actually gets accessed and how far back queries typically reach.

Hot-warm-cold storage tiers reduce costs for infrequently accessed data. Recent data resides on fast storage for active investigations, while older data moves to cheaper storage with slower access. This tiering maintains data availability while optimizing costs.
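
A tiering rule can be as simple as a lookup on data age. The boundaries below (7 and 30 days) are hypothetical values chosen for illustration; real boundaries would come from the query-pattern analysis described earlier.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical boundaries, ordered from newest to oldest tier.
TIERS = [
    (timedelta(days=7), "hot"),
    (timedelta(days=30), "warm"),
]


def tier_for(timestamp: datetime, now: datetime) -> str:
    """Pick the storage tier for data written at `timestamp`."""
    age = now - timestamp
    for max_age, tier in TIERS:
        if age <= max_age:
            return tier
    return "cold"


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    for days in (1, 14, 90):
        print(days, "days old ->", tier_for(now - timedelta(days=days), now))
```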

Query optimization reduces computational costs and improves performance. Inefficient queries that scan excessive data waste resources and money. Implementing query limits, encouraging use of time bounds, and optimizing common queries all reduce costs.

Rightsizing infrastructure prevents overprovisioning waste. Observability systems often run on oversized infrastructure “just in case” rather than matching actual load. Regular capacity reviews identify opportunities to reduce resource allocation.

Alerting and Incident Response Best Practices

Alert design should minimize false positives while ensuring critical issues surface quickly. Every alert should have clear remediation steps and represent genuine impact to users or business operations. Alert fatigue from excessive notifications erodes on-call engineer effectiveness.

Severity classifications help prioritize response efforts appropriately. Critical alerts require immediate response and escalation, while warning-level alerts might batch for review during business hours. Clear severity definitions prevent confusion during incidents.

Alert grouping reduces notification storms during widespread outages. When cascading failures affect multiple services, grouping related alerts prevents overwhelming on-call engineers with duplicate notifications. Intelligent grouping presents coherent incident narratives rather than scattered symptoms.
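
A basic form of grouping buckets alerts by a few shared labels so that one notification covers the whole bucket. The alert shape and the label names (`cluster`, `alertname`, `pod`) are assumptions for this sketch.

```python
from collections import defaultdict


def group_alerts(
    alerts: list[dict], group_by: tuple[str, ...] = ("cluster", "alertname")
) -> dict[tuple[str, ...], list[dict]]:
    """Bucket alerts that share the same values for the `group_by` labels."""
    groups: dict[tuple[str, ...], list[dict]] = defaultdict(list)
    for alert in alerts:
        # Missing labels fall into an empty-string bucket rather than failing.
        key = tuple(alert["labels"].get(label, "") for label in group_by)
        groups[key].append(alert)
    return dict(groups)


if __name__ == "__main__":
    firing = [
        {"labels": {"cluster": "eu-1", "alertname": "HighLatency", "pod": "api-1"}},
        {"labels": {"cluster": "eu-1", "alertname": "HighLatency", "pod": "api-2"}},
        {"labels": {"cluster": "us-1", "alertname": "DiskFull", "pod": "db-0"}},
    ]
    for key, members in group_alerts(firing).items():
        print(key, "->", len(members), "alert(s)")
```

Choosing which labels to group by is the design decision that matters: grouping too broadly hides distinct problems, while grouping too narrowly recreates the notification storm.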

Runbooks embedded in alerts accelerate response by providing immediate guidance. Including diagnostic queries, common fixes, and escalation paths within alert notifications helps on-call engineers respond effectively even when unfamiliar with affected systems.

Post-incident reviews transform outages into learning opportunities. Blameless postmortems examine what happened, why it happened, and how to prevent recurrence. Action items from these reviews drive continuous improvement in system reliability.

Observability for Kubernetes and Container Environments

Container-level metrics capture resource utilization and application performance within pods. Kubernetes exposes rich metrics about container CPU, memory, disk, and network usage. Monitoring these metrics reveals resource constraints and helps optimize container resource requests and limits.

The storage engine of a time series database (TSDB) organizes data into time-sharded blocks or chunks. Each block covers a specific time range and contains compressed data for multiple metrics. This organization enables efficient pruning during queries: the database can skip entire blocks that fall outside the requested time window.

Service mesh observability provides visibility into microservice communication. Service meshes like Istio or Linkerd capture detailed metrics and traces for service-to-service calls. This instrumentation happens transparently without requiring application code changes.

Kubernetes events provide operational context about cluster activities. Events record pod scheduling decisions, configuration changes, and infrastructure issues. Correlating application metrics with cluster events helps explain anomalies caused by infrastructure changes.

Multi-cluster observability enables consistent visibility across distributed Kubernetes deployments. Organizations running multiple clusters need unified observability to understand system-wide behavior. Cross-cluster correlation becomes essential for debugging issues affecting multiple environments.

Dynamic label management handles the ephemeral nature of container environments. Containers appear and disappear constantly as services scale, requiring observability systems that adapt to changing infrastructure. Proper label strategies maintain metric continuity despite infrastructure churn.

Common Cloud Observability Challenges and Solutions

Data volume growth outpaces budget allocations as infrastructure scales. The cost of comprehensive observability can approach or exceed infrastructure costs themselves. Strategic sampling, aggregation, and retention policies control growth while maintaining investigative capabilities.

Alert fatigue undermines on-call effectiveness when notification volumes become overwhelming. Establishing clear alert criteria, implementing alert grouping, and regularly reviewing alert usefulness prevents fatigue. Automated remediation reduces alerts that require human intervention.

Tool sprawl creates silos when different teams adopt incompatible observability solutions. Standardizing on common platforms or ensuring interoperability between tools prevents data fragmentation. Unified observability enables collaboration during cross-team incidents.

Skill gaps prevent teams from extracting full value from observability investments. Training engineers on effective query techniques, dashboard design, and incident response maximizes return on observability spending. Building observability expertise should be an ongoing organizational priority.

Compliance requirements complicate data retention and access controls. Observability data often contains sensitive information subject to regulations like GDPR or HIPAA. Implementing proper access controls, data masking, and retention policies ensures compliance while maintaining investigative capabilities.
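
Data masking is often applied in the pipeline before events reach storage. The sketch below redacts values under a hypothetical set of sensitive keys and replaces email addresses inside string values. The key list and the email pattern are illustrative assumptions and would not cover every kind of sensitive data.

```python
import re

# Simplified email pattern; good enough for a sketch, not for validation.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Hypothetical list of field names whose values are always redacted.
SENSITIVE_KEYS = {"password", "ssn", "authorization"}


def mask_event(event: dict) -> dict:
    """Return a copy of a flat log event with sensitive data masked."""
    masked = {}
    for key, value in event.items():
        if key.lower() in SENSITIVE_KEYS:
            masked[key] = "[REDACTED]"
        elif isinstance(value, str):
            masked[key] = EMAIL.sub("[EMAIL]", value)
        else:
            masked[key] = value
    return masked


if __name__ == "__main__":
    print(mask_event({
        "message": "login by ann@example.com",
        "password": "hunter2",
        "latency_ms": 12,
    }))
```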

Future Trends in Cloud Observability

Artificial intelligence and machine learning increasingly automate anomaly detection and root cause analysis. Instead of engineers manually analyzing data, AI systems identify unusual patterns and suggest probable causes. This automation reduces mean time to detection and resolution.

OpenTelemetry is emerging as the standard for observability instrumentation. This vendor-neutral framework provides consistent APIs and SDKs for metrics, logs, and traces across programming languages. Adoption of OpenTelemetry reduces vendor lock-in and improves interoperability.

Continuous profiling adds code-level performance insights to observability portfolios. By continuously sampling application performance data, teams identify inefficient code paths and optimization opportunities. This capability bridges the gap between observability and performance engineering.

Business observability connects technical metrics to business outcomes. Beyond tracking system health, organizations monitor user experience, conversion rates, and revenue impact. This alignment helps prioritize engineering efforts based on business value.

Edge computing introduces new observability challenges as workloads move closer to users. Traditional centralized observability approaches struggle with edge latency and bandwidth constraints. Distributed observability architectures that process data locally before selective aggregation address these challenges.